New technology, new tools... new automation strategies?

Automation is one component of improving team performance. Automating repetitive manual tasks gives team members time to solve other problems and come up with innovations that help the business. Automated regression tests give teams fast feedback and let them add new capabilities to their product without fear of breaking anything.

Back in the 90s we had rudimentary automated test tools and dreamed of bigger solutions, tools that not only test system behavior, but also:

- Alert us to visual differences in the UI, emails, and PDF files

- Identify gaps in our test coverage

- Test through AND behind the UI

- Test performance with the same test scripts (this actually did exist back then, and was cool)

- Look at our code and automatically generate regression tests (actually, this existed for unit-level tests back then, which is an anti-pattern)

- Let us test emails, documents, and other artifacts in addition to the UI pages

As we approach 2020, we see high-performing teams who can release valuable features to customers continually, with low change failure rate and a short time to restore service. There are many factors that allow teams to achieve this level. Test automation is only one of them, but it is an important one. Time that used to be spent doing manual regression tests can be devoted to more valuable activities such as exploratory testing. Shorter feedback cycles help teams adopting continuous delivery or deployment.

Test automation is hard

Though they see the advantages of automating regression tests, many organizations still struggle with it. If there is any test automation, it’s often the responsibility of QA/test team members who have no coding or automation experience but are trying to automate tests through the UI. The resulting unreliable and hard-to-maintain automated tests may cost the team even more time and energy than if they tested manually.

Even an organization with a team of experienced and competent test automators may find that they lack control over their environment. Developers on a separate team may be unwilling to instrument code to make it easier to test. They may be turning release feature toggles on and off without warning. A content team may be putting new elements into the UI unexpectedly.

Consider how automated tests have typically been written. If the team designs the system for test automation, then there are well-documented landmarks for finding elements, or well-defined conventions for constructing and using those landmarks: things like data attributes, element IDs, or class naming conventions. More commonly, it’s up to the test author to figure out the right incantation of XPath expressions or CSS selectors. This can be time-consuming for entire pages and applications, which slows down test creation.

It also leads to automated tests and test automation frameworks only using one or two characteristics of an element to find it. If those few locators change, the test will break even if other useful characteristics of the element remain the same and the overall functionality, behavior, design, and performance of the application are correct. Fixing these then requires going back to the original process of inspecting the page to find the right landmarks to repair the test. The maintenance costs for bespoke automated tests can add up.

People on these teams want to improve, but they’re too busy trying to keep up with manual regression testing to experiment with changes. How can they hope to get fast, reliable feedback on the latest changes to the code base?

Helping teams improve

We’ve been waiting a long time for our flying cars, but the technology that can provide the test automation features listed at the start of this article has started to be put to use. With cloud infrastructure, big data and the ability to process it affordably, artificial intelligence and machine learning, we can build tools that let people with a wide range of skills create useful, reliable, maintainable automated tests.

What may be more important: non-coders on the team can use these tools effectively. Developers are also comfortable with these tools and can add more capabilities to the tests if needed. A lone tester who’s unfortunately isolated from developers can benefit, and the whole team working together can benefit even more.

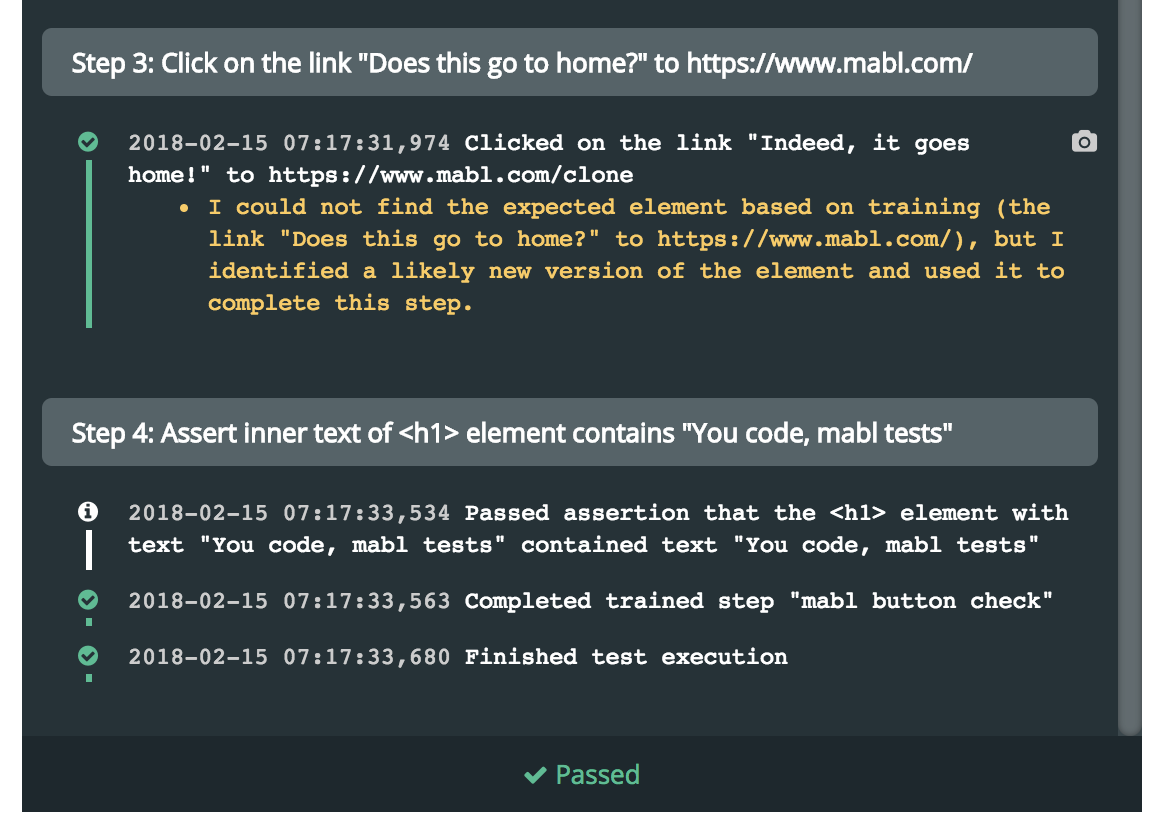

The new breed of testing tools effortlessly captures numerous characteristics of each interaction. Sophisticated algorithms may use all of those attributes to find the element, triangulating it from many angles. When the application changes, they are still able to find the element because they rely on more than just the one or two things that change. And at that point, if the test still passes, they can incorporate the changed attribute into the fingerprint of the element going forward. This greatly reduces the maintenance burden for the automated tests. There is no rework if, for example, your button moves, changes styling, or suggests “Click here” instead of “Click me.”

Screenshot taken from mabl's test output, showcasing how mabl collects enough fallback attributes to find changing elements.

Enabling better practices

Automating simple smoke tests through the UI can be the first step on the path to getting the full benefits of automation. The manual test time saved gives breathing room to learn practices like TDD and testing at other levels such as the API.

Now, imagine that those fairly simple smoke tests could also alert the team about unexpected visual changes! The team can investigate whether those are intentional changes, and if so, re-baseline them. The tests also provide alerts for page load time anomalies, which can give early warning about performance issues. These are valuable built-in benefits.
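As a rough illustration of how a page load time alert could work, the sketch below compares the latest measurement against the median of earlier runs. The use of median absolute deviation, the tolerance of 3, and the sample timings are our assumptions for the example, not a description of how any particular tool detects anomalies.

```python
import statistics


def is_load_time_anomaly(history_ms: list[float], latest_ms: float,
                         tolerance: float = 3.0) -> bool:
    """Flag latest_ms if it is more than `tolerance` deviations above the median.

    The deviation is the median absolute deviation (MAD) of the history,
    floored at 1 ms so a perfectly flat history does not flag tiny changes.
    """
    median = statistics.median(history_ms)
    mad = statistics.median([abs(x - median) for x in history_ms])
    spread = max(mad, 1.0)
    return latest_ms > median + tolerance * spread


# Hypothetical load times (milliseconds) from earlier runs of one smoke test.
history = [820, 790, 805, 840, 810, 795, 830]

slow_run_flagged = is_load_time_anomaly(history, 900)
normal_run_flagged = is_load_time_anomaly(history, 845)
```

With this history the median is 810 ms and the deviation is 15 ms, so anything above 855 ms is flagged: the 900 ms run raises an alert and the 845 ms run does not.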

In addition to testing UI pages, this tool from the future lets you verify emails such as signup confirmation emails. The visual change detection automatically kicks in for these too. You can also check the content of PDFs that are linked from the UI pages or attached to the emails, and the visual change detection applies to those PDFs as well.

This tool we’ve dreamed of for so long enables good automation practices. Among them is the ability to use API calls from the UI tests to set up and tear down test data, which speeds up tests and makes them more maintainable. There are many other ways to benefit from API requests in the tests. For example, a test can assert that database values match what shows in the UI. This gives teams options for testing more deeply than what appears in the UI itself.
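The setup-and-teardown pattern can be sketched in a few lines. Everything here is hypothetical: `FakeAccountsApi` is an in-memory stand-in for whatever HTTP API your product exposes, and the value "read from the UI" is hard-coded where a real test would read it from the page.

```python
from contextlib import contextmanager


class FakeAccountsApi:
    """In-memory stand-in for a real API client (hypothetical, for illustration)."""

    def __init__(self):
        self._accounts = {}
        self._next_id = 1

    def create_account(self, email):
        account_id = self._next_id
        self._next_id += 1
        self._accounts[account_id] = {"id": account_id, "email": email}
        return account_id

    def get_account(self, account_id):
        return self._accounts.get(account_id)

    def delete_account(self, account_id):
        self._accounts.pop(account_id, None)


@contextmanager
def temporary_account(api, email):
    """Create test data through the API and always remove it afterwards."""
    account_id = api.create_account(email)
    try:
        yield account_id
    finally:
        api.delete_account(account_id)


api = FakeAccountsApi()
with temporary_account(api, "pat@example.com") as account_id:
    # Stand-in for the text a UI step would read from the account page.
    shown_in_ui = "pat@example.com"
    # Check behind the UI: the stored value matches what the page displays.
    stored = api.get_account(account_id)["email"]
    values_match = stored == shown_in_ui

# After the block exits, the test data is gone even if a step had failed.
leftover = api.get_account(account_id)
```

Because the cleanup lives in the `finally` clause, a failed assertion inside the block still removes the account, which keeps one test's data from leaking into the next.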

As teams start automating regression tests or seek to add to existing test suites, they may struggle to prioritize which tests are needed next. Another benefit of today’s technology is the ability to identify which tests interact with each UI page. We have tools like Segment that let us capture how customers interact with the UI pages in production. The ability to compare this usage data with the current test coverage helps us know where to focus our automation efforts next.
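The comparison itself can be quite simple. The sketch below assumes you already have two exports: page view counts from production and a map of which tests touch each page. The page paths, counts, and test names are made up, and no analytics API is called.

```python
def prioritize_untested_pages(page_views: dict[str, int],
                              tests_by_page: dict[str, list[str]]) -> list[str]:
    """Return pages with no tests, most heavily used first."""
    uncovered = {page: views for page, views in page_views.items()
                 if not tests_by_page.get(page)}
    return sorted(uncovered, key=lambda page: uncovered[page], reverse=True)


# Hypothetical production usage: page path -> views in the last 30 days.
page_views = {"/login": 12000, "/checkout": 8500, "/settings": 900, "/reports": 4300}

# Hypothetical coverage map: page path -> names of tests that visit it.
tests_by_page = {"/login": ["smoke: login"], "/settings": []}

next_to_automate = prioritize_untested_pages(page_views, tests_by_page)
# next_to_automate == ["/checkout", "/reports", "/settings"]
```

Here `/checkout` comes first because it is heavily used and has no test at all, while `/login` drops out of the list because a smoke test already visits it.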

A new generation of tools, new models for strategy?

If you had asked us 20 or so years ago whether there would ever be a test automation tool that everyone on a delivery team could use and find valuable, we’d have said that’s just wishful thinking. Thanks to the ability to gather, store, quickly process, and use large amounts of data, tools that let you start simply but learn about many aspects of your product’s quality are here. One test can check a UI feature’s behavior, learn whether there are visual or performance changes, and verify other artifacts such as emails and PDFs. At the same time, it can check behind the UI directly to the server and database via the API. Your team’s developers can choose to add some custom-coded test steps. As you build your automated test suites, the tool helps you track coverage, prioritize what to automate next, and achieve the right balance of tests to give your team confidence for frequent deploys to production.

At the same time, cloud infrastructure now allows teams to run each test in their suite concurrently, each in its own container, so that the feedback is only as slow as the longest test. That is a game-changer for tests through the UI, especially for teams that need to test in multiple browsers.
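The arithmetic behind "only as slow as the longest test" is easy to check. This sketch simulates handing tests, in order, to whichever worker frees up first; the durations are invented, and real cloud runners add startup overhead that is ignored here.

```python
import heapq


def feedback_seconds(durations: list[float], workers: int) -> float:
    """Wall-clock time for a suite when each test goes to the first free worker.

    Assumes workers >= 1 and ignores container startup time.
    """
    if not durations:
        return 0.0
    # Each entry is the time at which one worker becomes free.
    finish_times = [0.0] * min(workers, len(durations))
    heapq.heapify(finish_times)
    for duration in durations:
        earliest_free = heapq.heappop(finish_times)
        heapq.heappush(finish_times, earliest_free + duration)
    return max(finish_times)


# Hypothetical UI test durations in seconds.
durations = [40, 95, 30, 60, 25]

one_at_a_time = feedback_seconds(durations, workers=1)             # sum of all tests
two_workers = feedback_seconds(durations, workers=2)
one_container_each = feedback_seconds(durations, workers=len(durations))  # longest test
```

Run one at a time, this suite takes 250 seconds. With a container per test, feedback arrives in 95 seconds, the length of the slowest test, and multiplying the suite across several browsers does not change that as long as each run gets its own container.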

The test automation pyramid and triangle models have guided a strategy of pushing as many tests as possible down to more granular levels. Teams prefer tests that are quick to write and quick to run, and minimize the tests that are painful to maintain and slow to run. With tests that run more reliably through the UI, run faster in the cloud, and test much more than feature behavior, the new-generation tools might change the automation model shape. Teams can try new strategies to successfully shorten feedback loops with automation and enable continuous delivery or deployment.