Powerful Test Automation Practices: A New Series

Welcome to our series of articles introducing you to tried-and-true practices and patterns that help teams create valuable automated tests. Your team can apply these basic concepts to build up tests that quickly inform you about the outcomes of the latest changes to your software product, with a reasonable investment of time. In this first installment, we explore the value of effective use of assertions in tests.

Part 1: The Importance of Being Assertive

This is not an attempt to correct Oscar Wilde. Nor will it extol the virtues of being less timid. Rather, we’re going to talk about the use of assertions in tests.

A Steep Hill to Climb

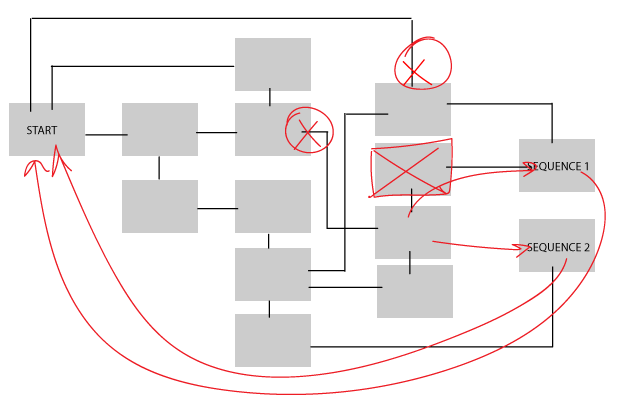

If you’re coming to test automation from manual testing, you have a steep and potentially painful hill to climb. You need to learn one or more programming languages, such as Python, Ruby, Java, JavaScript, Swift, or C#. You need to learn their associated testing frameworks, such as RSpec, pytest, JUnit, Mocha, or others. If your team uses a domain-specific language (DSL) such as Gherkin to specify tests, or if testers and coders collaborate to automate, you may not need to learn how to write code. In any case, it pays to get familiar with your product’s architecture, as well as the technologies associated with the applications you’re testing. Perhaps you’ll need to dive deeper into the details of HTML, CSS, JavaScript, and various application frameworks, and that’s just for web applications! Then there are continuous integration (CI) and continuous delivery (CD) systems like Jenkins, TeamCity, Spinnaker, Concourse, Codeship, Travis CI, CircleCI, and more. The need-to-learn list continues with version control, such as Git, and build tools like Maven, Gradle, webpack, Bundler, and so on.

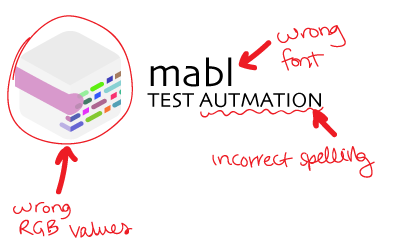

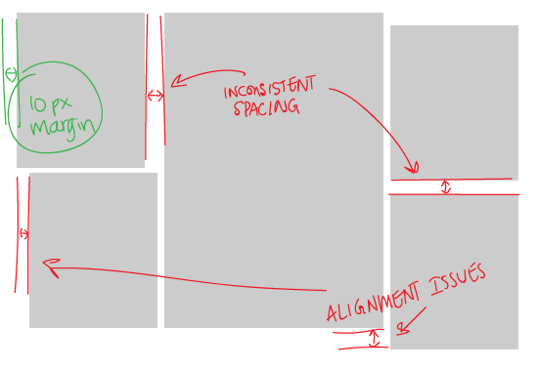

In all of this, it’s easy to forget that you’re still just trying to teach a machine how to execute repetitive tests and checks. It’s easy to overlook the things computers don’t know how to do but that you do easily and naturally, sometimes without even realizing it. By that we mean all the things you consider when determining that software behaves correctly. You look at spelling, alignment, visibility, information content, graphical layout, and more. We have lists of things we’re supposed to check, but there are also the things that we don’t need to be told. Beyond that, the magical thinking machines that are our brains notice when things “just don’t seem right.”

But computers don’t have your checklist. They only do what they’re told to do, and they certainly don’t have a sense of what’s right or not. As a test automator, you need to translate all these aspects of your perception into code. This is where assertions come into play.

The Greatest Test Case

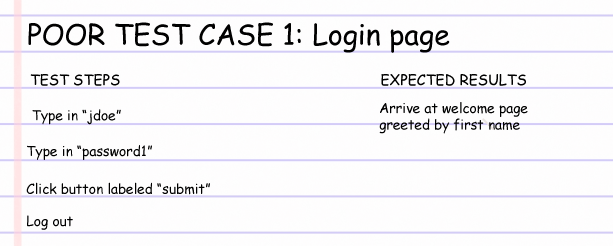

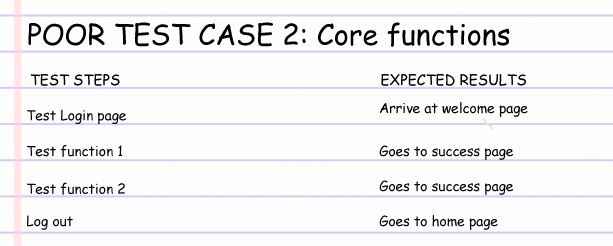

Picture for a moment the best test case you ever saw. Of course, it included all of the steps and data you needed to exercise the application. But if it stopped there, it wouldn’t have been a great test case. Sure, getting through it would tell you that nothing had gone horribly wrong, but it certainly wouldn’t give you high confidence that the application did everything correctly.

The great test case also included lists of the behaviors and presentation you should expect during and after each step. These expectations might have ranged from simple reminders to detailed descriptions. Either way, they gave you a checklist of the most important things to verify for each step. Where relevant, they probably included the methods you could use to verify them. Modular test cases also include the expected state of the application before and after the test case as a whole, so that you can put them in the right context when combining them with other test cases.
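As a rough illustration, the checklist described above might translate into code along these lines. This is only a sketch: the `Cart` class and its methods are hypothetical stand-ins for whatever application you are testing, not part of any real product or library.

```python
class Cart:
    """Hypothetical application object, invented for this illustration."""

    def __init__(self):
        self.items = []

    def add(self, name, price):
        self.items.append((name, price))

    def total(self):
        return sum(price for _, price in self.items)


def test_adding_items_updates_total():
    cart = Cart()

    # Expected state before the test case: an empty cart.
    assert cart.items == []
    assert cart.total() == 0

    # Step 1, followed by the expectation for that step.
    cart.add("notebook", 4)
    assert cart.total() == 4

    # Step 2, followed by the expected state after the test case as a whole.
    cart.add("pen", 2)
    assert len(cart.items) == 2
    assert cart.total() == 6
```

Without the `assert` lines, this test would still run to the end, but it would only tell you that nothing crashed.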

Breaking Down Your Perceptions

In test automation, you use assertions to verify each of the elements that a great test case checks. The first big challenge is simply to be conscious of the things you may not have been explicitly conscious of when you were evaluating whether it “just looked right.” This is not a technical challenge, which is why it’s easy to overlook when faced with the more obvious technical challenges of automation.

Even with an amazing test case, you supplement what’s written with your own observation. Here are a few categories and examples of things that can be assertions:

Each of these can be broken down into smaller elements or described in more detail. Color may be described with a name or an RGB value. Size could be in pixels, ems, rems, inches, millimeters, points, and more. Alignment can be represented by equivalence of coordinate dimensions. And so forth.
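To make that concrete, here is a minimal sketch of how color, size, and alignment might be asserted once they are expressed as values. The `Box` type, the `NAMED_COLORS` table, and the helper functions are all assumptions made up for this example; a real UI framework would give you its own way to read these properties.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    """Hypothetical rendered element: position and size in pixels, color as RGB."""
    x: int
    y: int
    width: int
    height: int
    color: tuple


# Assumed mapping from color names to RGB values, for illustration only.
NAMED_COLORS = {"red": (255, 0, 0), "white": (255, 255, 255)}


def assert_color(box, name):
    expected = NAMED_COLORS[name]
    assert box.color == expected, f"expected {name} {expected}, got {box.color}"


def assert_size(box, width, height):
    actual = (box.width, box.height)
    assert actual == (width, height), f"expected {(width, height)}, got {actual}"


def assert_left_aligned(*boxes):
    # Alignment as equivalence of a coordinate: every left edge shares one x.
    xs = {box.x for box in boxes}
    assert len(xs) == 1, f"left edges differ: {sorted(xs)}"


label = Box(x=20, y=40, width=120, height=16, color=(255, 0, 0))
field = Box(x=20, y=64, width=240, height=32, color=(255, 255, 255))

assert_color(label, "red")
assert_size(field, 240, 32)
assert_left_aligned(label, field)
```

Each helper carries a failure message, so a failing check says what was expected and what was found rather than just that something was wrong.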

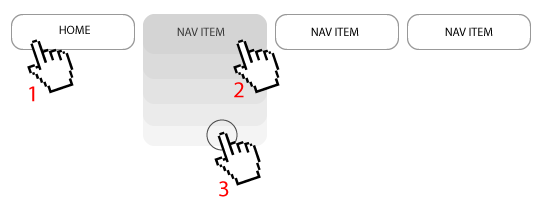

Conquer the Hill

Assertions are feedback. Take a look at any automated UI tests you’re currently using. If you have a UI test that navigates to a new page but doesn’t verify that it has landed on the correct page, that could lead to problems as the test proceeds. Use assertions to make sure your UI pages meet your team’s visual and design quality standards. Use them to check error handling: Does the UI return the correct message? Does it put the user in the right place? Is everything happening in an acceptable time frame? Use assertions to make sure the state of the various components in your application changes as expected.
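The questions above could be sketched as assertions like the following. Everything here is hypothetical: `FakeBrowser` is a made-up stand-in for a real browser driver so that the example runs anywhere, and the URLs, the error message, and the two-second limit are assumed values, not recommendations.

```python
import time


class FakeBrowser:
    """Hypothetical stand-in for a real browser driver."""

    def __init__(self):
        self.url = "https://example.test/login"
        self.title = "Log in"
        self.error_text = ""

    def submit_login(self, user, password):
        if password == "correct-password":
            self.url = "https://example.test/dashboard"
            self.title = "Dashboard"
            self.error_text = ""
        else:
            self.error_text = "Invalid username or password."


def test_successful_login_lands_on_dashboard():
    browser = FakeBrowser()
    started = time.monotonic()
    browser.submit_login("pat", "correct-password")
    elapsed = time.monotonic() - started

    # Did we land on the correct page?
    assert browser.url.endswith("/dashboard"), f"landed on {browser.url}"
    assert browser.title == "Dashboard"
    # Did it happen in an acceptable time frame? (assumed limit: 2 seconds)
    assert elapsed < 2.0, f"login took {elapsed:.2f}s"


def test_failed_login_shows_message_and_stays_put():
    browser = FakeBrowser()
    browser.submit_login("pat", "wrong-password")

    # Does the UI return the correct message?
    assert browser.error_text == "Invalid username or password."
    # Does it put the user in the right place?
    assert browser.url.endswith("/login"), f"landed on {browser.url}"
```

With a real driver, the landing-page assertion comes right after the navigation step, so a wrong page fails the test at that point instead of causing confusing errors several steps later.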

Using assertions to confirm that the application is up to snuff across all those quality attributes can give you confidence that each new change to the product delivers some value to the customer. These checks will pave your way through that painful test automation learning curve.

In part 2, we dive deeper into how to formulate assertions for optimal feedback. We invite you to post any questions you have in the comments here!

Meet the authors:

Lisa Crispin: testing advocate at mabl, author, donkey whisperer

Stephen Vance: mabl software engineer