Integration testing is the phase in the deployment testing process in which a software application is tested in its entirety, using fully operational versions of all its dependency components. Whereas the unit tests written during development mock out code dependencies, in the integration testing phase those dependencies are fully operational. Integration testing is where everything comes together: the code and all its parts are verified to work as expected, and all of the application's functionality is tested. Thus, all of the code needs to be covered by tests, as does all of the program flow. Some smoke testing takes place afterwards, but for all intents and purposes, integration is the last stop on the way to production release.
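To make the contrast with unit testing concrete, here is a minimal Python sketch. The `UserService` class and its `users` table are hypothetical. The unit test replaces the database connection with a `unittest.mock.Mock`, while the integration test wires the same class to a real SQLite database. An in-memory SQLite database is used only so the sketch is self-contained; in an actual integration environment, the test would connect to the fully operational datastore the application really uses.

```python
import sqlite3
import unittest
from unittest import mock


class UserService:
    """Hypothetical component under test; it depends on a DB-API connection."""

    def __init__(self, conn):
        self.conn = conn

    def add_user(self, name):
        cur = self.conn.execute("INSERT INTO users (name) VALUES (?)", (name,))
        self.conn.commit()
        return cur.lastrowid

    def get_user_name(self, user_id):
        row = self.conn.execute(
            "SELECT name FROM users WHERE id = ?", (user_id,)
        ).fetchone()
        return row[0] if row else None


class UserServiceUnitTest(unittest.TestCase):
    """Unit test: the database dependency is mocked out."""

    def test_get_user_name_uses_query_result(self):
        fake_conn = mock.Mock()
        # The mock returns a canned row; no real database is touched.
        fake_conn.execute.return_value.fetchone.return_value = ("Ada",)
        self.assertEqual(UserService(fake_conn).get_user_name(1), "Ada")


class UserServiceIntegrationTest(unittest.TestCase):
    """Integration test: a real (here, in-memory) SQLite database is used."""

    def setUp(self):
        self.conn = sqlite3.connect(":memory:")
        self.conn.execute(
            "CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT NOT NULL)"
        )

    def tearDown(self):
        self.conn.close()

    def test_round_trip_through_real_database(self):
        service = UserService(self.conn)
        user_id = service.add_user("Ada")
        self.assertEqual(service.get_user_name(user_id), "Ada")
        self.assertIsNone(service.get_user_name(user_id + 1))
```

Running `python -m unittest <file>.py` executes both tests; only the second one proves that the SQL actually works against a database.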

Understanding the Scope of Testing

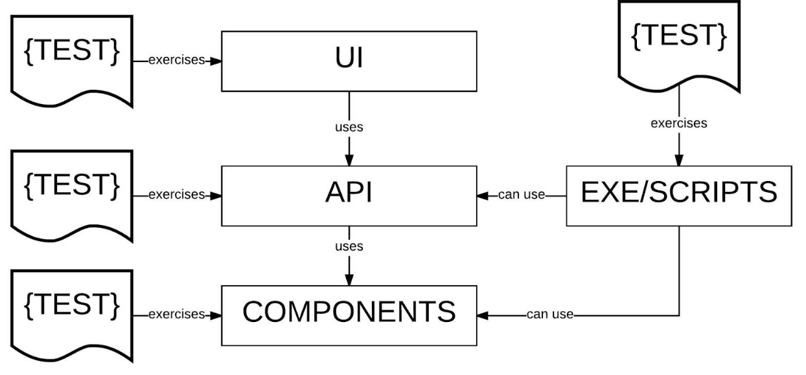

The first order of business in Integration Testing is establishing the boundary of the code under test. For example, if the purpose of the Integration Test is to test user interaction with an application, the boundary of testing will be the application's Graphical User Interface (GUI). For a web application, that boundary is the application's web pages viewed via a browser. In the case of native applications running on a mobile device or desktop, the boundary will be the application's GUI running directly on the operating system.

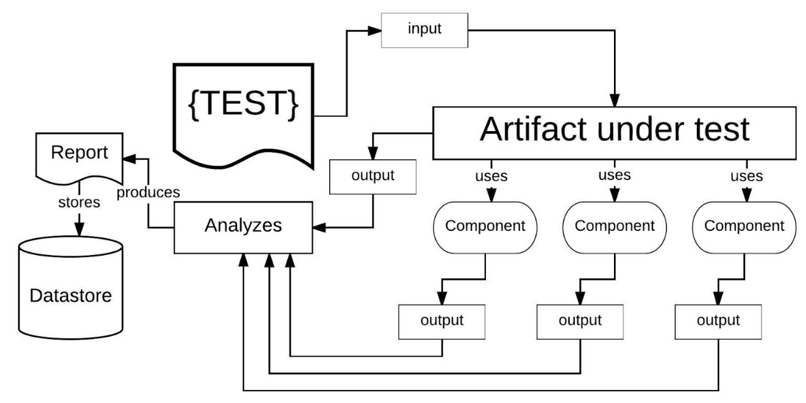

An Integration Test verifies the explicit behavior of an artifact as well as the implicit behavior of the artifact's constituent components.

When Integration Testing an API, the boundary is the set of endpoints published by the API. If the component under test is an object-oriented binary with constituent dependencies, the boundary of Integration Testing is the component's public properties and methods. If the component is an executable file or script, the boundary of Integration Testing is the command-line invocation of the script, including its parameters.
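When the boundary is a command-line invocation, the test should drive the program exactly as a user or CI job would: pass arguments and input, then check the exit code and output. In this minimal sketch, Python's standard `json.tool` module (invoked as `python -m json.tool --sort-keys`) stands in for the script under test; substitute your own script path and parameters.

```python
import json
import subprocess
import sys
import unittest


class JsonToolCliIntegrationTest(unittest.TestCase):
    """Exercise a program purely through its command-line boundary."""

    # Stand-in for "the script under test"; replace with your own argv.
    COMMAND = [sys.executable, "-m", "json.tool", "--sort-keys"]

    def run_cli(self, stdin_text):
        # argv + stdin go in; exit code + stdout/stderr come out.
        return subprocess.run(
            self.COMMAND, input=stdin_text, capture_output=True, text=True, timeout=30
        )

    def test_valid_input_is_sorted_and_exits_zero(self):
        result = self.run_cli('{"b": 1, "a": 2}')
        self.assertEqual(result.returncode, 0)
        self.assertEqual(json.loads(result.stdout), {"a": 2, "b": 1})
        # The --sort-keys parameter should place "a" before "b" in the output.
        self.assertLess(result.stdout.index('"a"'), result.stdout.index('"b"'))

    def test_invalid_input_exits_nonzero_with_message(self):
        result = self.run_cli("{not json")
        self.assertNotEqual(result.returncode, 0)
        self.assertTrue(result.stderr.strip())
```

Note that the test asserts on the parameter's effect (key order) as well as on the exit code, since both are part of the script's contract at this boundary.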

Choosing the Right Tool for Integration Testing

Determining the boundary is important because it will be a deciding factor in choosing the tools to do the testing. For example, if the Integration Test boundary is a set of browser-based web pages, you can use mabl's testing capabilities. If the boundary is a native UI, you can use a tool such as XCUITest for iOS, Espresso for Android, or Test Studio for Windows Presentation Foundation (WPF) testing.

API Integration Testing can be facilitated using any framework or technique that can access an API's endpoints via HTTP. For example, Node developers can use Mocha/Chai to exercise an endpoint. JMeter provides a language-agnostic way to exercise an endpoint. Python developers can create tests that call an API URL using the Requests library and use the unittest library to assert on the responses. Don't be misled by the use of a unit testing library within the scope of Integration Testing: in this case, the unit testing library is simply serving as a test runner. Analyzing test results is another story, which we'll discuss later in this article.
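The following sketch shows an API boundary being exercised over HTTP, with unittest acting purely as the test runner. Two assumptions keep it self-contained: it uses the standard library's `urllib.request` rather than Requests, and it starts a small in-process stand-in server whose `/users/42` endpoint is hypothetical. In a real Integration Test, `base_url` would point at the deployed environment and the stand-in server would be removed.

```python
import json
import threading
import unittest
import urllib.error
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer


class _StandInApiHandler(BaseHTTPRequestHandler):
    """Hypothetical stand-in for a deployed API (not part of a real test)."""

    def do_GET(self):
        if self.path == "/users/42":
            status, payload = 200, {"id": 42, "name": "Ada"}
        else:
            status, payload = 404, {"error": "not found"}
        body = json.dumps(payload).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep test output quiet


class UserEndpointIntegrationTest(unittest.TestCase):
    @classmethod
    def setUpClass(cls):
        # Port 0 asks the OS for any free port.
        cls.server = HTTPServer(("127.0.0.1", 0), _StandInApiHandler)
        cls.base_url = f"http://127.0.0.1:{cls.server.server_port}"
        threading.Thread(target=cls.server.serve_forever, daemon=True).start()

    @classmethod
    def tearDownClass(cls):
        cls.server.shutdown()
        cls.server.server_close()

    def test_existing_user_returns_json(self):
        with urllib.request.urlopen(f"{self.base_url}/users/42", timeout=5) as resp:
            self.assertEqual(resp.status, 200)
            self.assertEqual(resp.headers["Content-Type"], "application/json")
            self.assertEqual(json.load(resp), {"id": 42, "name": "Ada"})

    def test_unknown_user_returns_404(self):
        # urllib raises HTTPError for 4xx/5xx responses.
        with self.assertRaises(urllib.error.HTTPError) as ctx:
            urllib.request.urlopen(f"{self.base_url}/users/999", timeout=5)
        self.assertEqual(ctx.exception.code, 404)
        ctx.exception.close()
```

The assertions check status, headers, and body at the endpoint, which is all a Black Box API test can see.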

When it comes to performing Integration Testing on binary components such as jar files, .NET DLLs, or Node and Python packages, things become more specific to the language at hand. For Java jar files, you can use TestNG. MSTest is a good tool for Integration Testing .NET DLLs. Mocha/Chai can be used as the test runner for Integration Tests on Node packages, and the same is true of unittest for Python. Again, in these cases the tools are being used as nothing more than test runners. There is a good deal more work to be done in terms of gathering test results and analyzing the information.

Collecting and Analyzing Test Results

In the scheme of things, designing and running an Integration Test is about half the work involved in performing an Integration Test. Getting and analyzing all the data relevant to the testing at hand makes up the other half.

The type and breadth of data you are going to collect depends on whether the Integration Test is Black Box, Gray Box, or White Box.

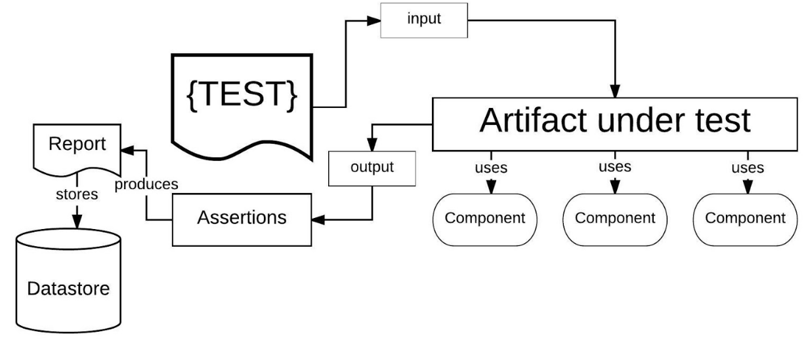

Black Box Testing

In Black Box testing, the way the code works is hidden from the test. The only things apparent are the data that goes into a component and the data that comes out. Thus, an integration test sends in some data and verifies that the output conforms to the expectations defined in the test plan. Therefore, a good deal of thought needs to be given to the breadth of testing, making sure that all good and bad behaviors are anticipated.

Nothing but the input and output of an artifact under test is apparent and subject to verification in a Black Box Test.
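A common way to cover both good and bad behaviors in a Black Box test is a table of inputs and expected outcomes. In this minimal sketch, Python's `ipaddress.ip_address` function stands in for the artifact under test; the test never inspects how it works, only what it returns or raises for each input. The specific cases are illustrative, not an exhaustive test plan.

```python
import ipaddress
import unittest

# Stand-in for the opaque artifact under test; swap in your own entry point.
COMPONENT = ipaddress.ip_address

# Good behaviors: input -> expected output (the normalized string form).
GOOD_CASES = [
    ("192.168.0.1", "192.168.0.1"),
    ("2001:0db8:0000:0000:0000:0000:0000:0001", "2001:db8::1"),
    ("::1", "::1"),
]

# Bad behaviors: inputs the test plan says must be rejected.
BAD_CASES = ["", "256.0.0.1", "1.2.3", "not-an-ip"]


class BlackBoxIpParsingTest(unittest.TestCase):
    def test_good_inputs(self):
        for raw, expected in GOOD_CASES:
            with self.subTest(raw=raw):
                self.assertEqual(str(COMPONENT(raw)), expected)

    def test_bad_inputs_are_rejected(self):
        for raw in BAD_CASES:
            with self.subTest(raw=raw):
                with self.assertRaises(ValueError):
                    COMPONENT(raw)
```

Keeping the cases in plain data tables makes it easy to extend coverage from the test plan without touching the test logic.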

Gray Box Testing

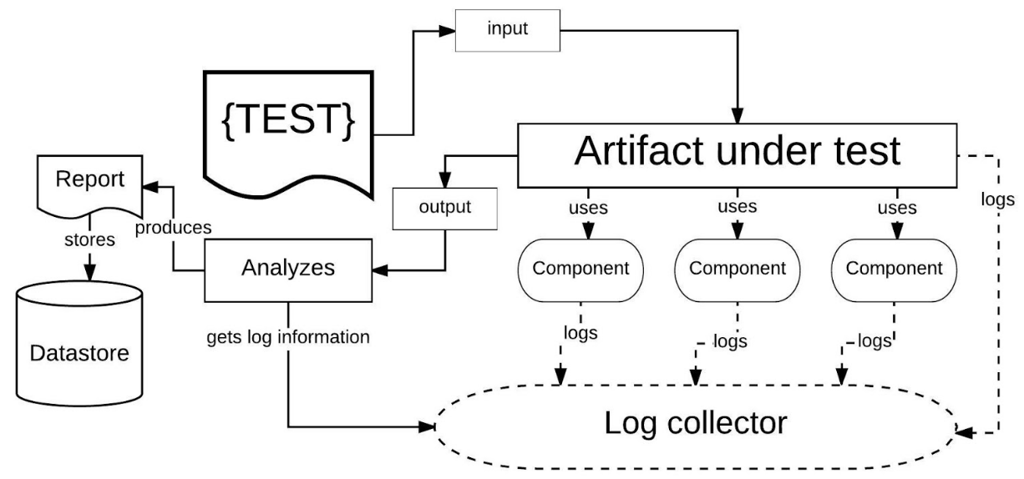

In Gray Box testing, the internals of the code are still hidden from the test. However, in addition to providing input and evaluating output, in a Gray Box situation the system emits information during the test that can also be gathered and analyzed to verify that behavioral expectations are met. For example, system logs might be considered information important to the Integration Test. In that case, log data will need to be gathered, and the test plan will need to incorporate log data along with test result data.

In Gray Box Testing, input and output data are apparent, and data emitted and recorded by constituent components can also be collected for analysis.

For example, an Integration Test can key on a correlationId that traverses the logging of all components, gathering the information needed to verify that every component in a transaction behaved as expected.
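Here is a minimal sketch of the correlationId technique, under stated assumptions: each component is assumed to write one JSON object per log line with `component`, `event`, and `correlationId` fields, and the log lines from all components are assumed to have already been gathered into one list in time order. The field names, component names, and events are all hypothetical.

```python
import json
from collections import defaultdict


def events_by_component(log_lines, correlation_id):
    """Group the events logged for one correlationId by the component that logged them."""
    grouped = defaultdict(list)
    for line in log_lines:
        try:
            record = json.loads(line)
        except json.JSONDecodeError:
            continue  # ignore lines that are not structured JSON
        if not isinstance(record, dict):
            continue
        if record.get("correlationId") == correlation_id:
            grouped[record.get("component", "unknown")].append(record.get("event"))
    return dict(grouped)


def verify_transaction(log_lines, correlation_id, expected):
    """Return a list of mismatches between expected and observed events per component."""
    observed = events_by_component(log_lines, correlation_id)
    problems = []
    for component, expected_events in expected.items():
        actual = observed.get(component, [])
        if actual != expected_events:
            problems.append(f"{component}: expected {expected_events}, got {actual}")
    return problems


# Hypothetical log lines merged from three components during one test request.
LOGS = [
    '{"component": "api", "event": "request.received", "correlationId": "abc-123"}',
    '{"component": "orders", "event": "order.created", "correlationId": "abc-123"}',
    '{"component": "api", "event": "request.received", "correlationId": "zzz-999"}',
    "plain text line from a legacy component",
    '{"component": "billing", "event": "invoice.issued", "correlationId": "abc-123"}',
    '{"component": "api", "event": "response.sent", "correlationId": "abc-123"}',
]

# What the test plan says each component should log for this transaction.
EXPECTED = {
    "api": ["request.received", "response.sent"],
    "orders": ["order.created"],
    "billing": ["invoice.issued"],
}

print(verify_transaction(LOGS, "abc-123", EXPECTED))  # → []
```

An empty list means every component logged exactly the expected events for the transaction; any other result names the component that deviated, which is the Gray Box evidence the test result alone cannot provide.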

White Box Testing

In White Box testing, everything is apparent. Thus, an Integration Test will reveal not only data in and data out, but also the behavior of all the code in all the components in play. For example, a White Box Integration Test is able to report the code coverage of all the components in play as well as query behavior against relevant datastores, to name a few items.

White Box Integration Tests have access to and verify all of the parts relevant to the artifact under test.
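As one illustration of verifying query behavior in a White Box test, Python's `sqlite3` module lets a test register a trace callback (`Connection.set_trace_callback`) that receives each SQL statement the connection executes. In this sketch, the `find_active_user_names` component and the `users` schema are hypothetical; for other databases, the equivalent would be the driver's or server's own query logging. Code coverage, the other example mentioned above, is usually collected with a separate tool such as coverage.py.

```python
import sqlite3


def find_active_user_names(conn):
    """Hypothetical component under test: returns active users' names, sorted."""
    rows = conn.execute(
        "SELECT name FROM users WHERE active = 1 ORDER BY name"
    ).fetchall()
    return [name for (name,) in rows]


def run_with_query_capture(conn, func):
    """Call func(conn) while recording every SQL statement the connection runs."""
    captured = []
    conn.set_trace_callback(captured.append)
    try:
        result = func(conn)
    finally:
        conn.set_trace_callback(None)  # passing None disables tracing
    return result, captured


# Seed a throwaway datastore (hypothetical schema) before enabling tracing.
conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE users (name TEXT, active INTEGER);
    INSERT INTO users VALUES ('Grace', 1), ('Linus', 0), ('Ada', 1);
    """
)

names, queries = run_with_query_capture(conn, find_active_user_names)
# A White Box check: the component should issue a single SELECT (no N+1 pattern).
select_count = sum(1 for q in queries if q.lstrip().upper().startswith("SELECT"))
print(names)  # → ['Ada', 'Grace']
print(select_count)
```

The test can now assert on the output (`names`) and on the component's internal behavior (`queries`) in the same run.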

Creating an Integration Test Plan and Executing It

No matter the type of test (Black, Gray, or White Box), a consistent test plan needs to be created and followed. Just planning to create some automation scripts and run them is not enough. Some aspects of integration testing can be easily automated, particularly Black Box tests from the API layer down. Some UI Integration Testing is easy to automate, if the UI is simple and the test is Black Box in nature. But in the Gray and White Box domains, more human intervention is required. There is simply too much information to gather, and that information can be quite elusive. Consider, for example, tracking behavior along the execution path of an API endpoint invocation as workflow logic travels among a variety of distributed components on disparate platforms.

Putting It All Together

Integration Testing is a critical part of the Software Deployment Lifecycle. Integration Testing picks up where unit testing leaves off. Whereas a unit test will mock out all but the essential behavior of the unit under test, usually a function, Integration Testing expects fully functioning software at every level.

Integration Testing is more than running a test and analyzing a single response. Depending on the scope and type of testing (Black, Gray, or White Box), collecting test result information can be extensive and, at times, elusive. Thus, it is important to have a consistent test plan in place, one that describes what is to be tested as well as who or what is to do the testing. Sometimes automation can perform the testing. Other times, Integration Test execution and analysis are better done by humans. Lastly, test results must be stored and distributed in a predictable manner, one that lends itself easily to historical analysis.
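One simple way to make results predictable and easy to analyze historically is to append a structured record of every run to a JSON Lines file. This sketch assumes the suite is run with Python's unittest; the record fields, the file name, and the `_ExampleSuite` class (which fails on purpose so the record has something to show) are hypothetical.

```python
import datetime
import json
import os
import tempfile
import unittest


def summarize(result, suite_name):
    """Turn a unittest.TestResult into a plain dict suitable for long-term storage."""
    return {
        "suite": suite_name,
        "timestamp": datetime.datetime.now(datetime.timezone.utc).isoformat(),
        "tests_run": result.testsRun,
        "failures": [test.id() for test, _ in result.failures],
        "errors": [test.id() for test, _ in result.errors],
        "skipped": [test.id() for test, _ in result.skipped],
        "successful": result.wasSuccessful(),
    }


def append_run(path, record):
    """Append one run as a JSON line; each line is one historical data point."""
    with open(path, "a", encoding="utf-8") as fh:
        fh.write(json.dumps(record) + "\n")


def load_history(path):
    """Read every stored run back, oldest first."""
    with open(path, encoding="utf-8") as fh:
        return [json.loads(line) for line in fh if line.strip()]


class _ExampleSuite(unittest.TestCase):
    """Hypothetical stand-in for a real integration suite."""

    def test_passes(self):
        self.assertTrue(True)

    def test_deliberately_fails(self):
        self.assertEqual(1, 2)


def demo():
    """Run the example suite twice and return the stored history."""
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "integration-history.jsonl")
        for _ in range(2):
            result = unittest.TestResult()
            unittest.TestLoader().loadTestsFromTestCase(_ExampleSuite).run(result)
            append_run(path, summarize(result, "example-integration"))
        return load_history(path)
```

Because every line has the same shape, the history file can later be loaded into any analysis tool to spot flaky tests or regressions over time.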

Integration Testing must be thorough and rigorous. It is the critical gate through which code must pass on its way to production. A well-designed, well-executed Integration Test is one of the best ways to catch problems early and thus ensure that quality code is released quickly and cost-effectively.